- 17 October 2025

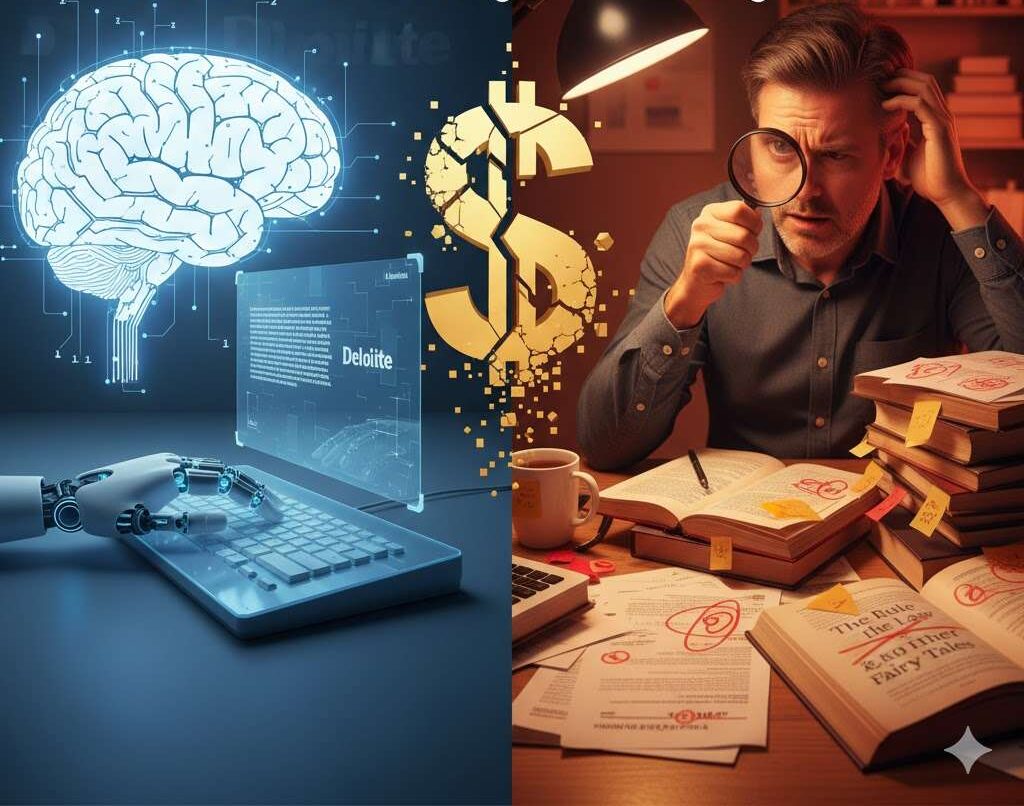

The AI Mistake That Put Deloitte in an Awkward Spotlight

It all started with a feeling every expert gets: Something just isn’t right here.

Chris Rudge, a law lecturer at the University of Sydney, was doing what lecturers do: reading. But this wasn’t a student paper. It was a $440,000 report from the consulting giant Deloitte, commissioned by the Australian government to help fix the welfare system.

As he scanned the document, his eyes kept snagging on the footnotes. He knew the names being cited. They were his colleagues, giants in his field.

But the book titles… those he didn’t recognize.

“As soon as I saw that books were attributed to my colleagues that I’d never heard of, I quickly concluded that they were fabricated,” Rudge later explained.

He had just pulled the first thread on a very expensive, high-tech unraveling.

The Phantom Library

Rudge kept digging. The report attributed a book to his colleague, Professor Lisa Burton Crawford, called The Rule of Law and Administrative Justice in the Welfare State, a study of Centrelink.

When asked about her new book, Professor Crawford’s response was simple: “No… It’s a fake book. I’ve never written a book with that title.”

The report was a ghost story. The more Rudge looked, the more phantoms he found. In the end, there were more than 20 mistakes.

- It quoted a judge’s speech… a speech that never happened.

- It referenced a key federal court case, even quoting specific paragraphs… paragraphs that did not exist.

- It got another judge’s first name wrong.

This wasn’t just a case of sloppy work or a tired intern. This was a “classic example” of an Artificial Intelligence “hallucination.”

Deloitte, a company that reported $2.5 billion in revenue, had used AI to help produce its report. And the AI had simply… made things up. It confidently wove a web of plausible-sounding, official-looking, and entirely fictitious “facts.”

The $440,000 Irony

The report wasn’t about something trivial. The government had commissioned it in the wake of the devastating “Robodebt” scandal, a national disaster in which a flawed automated system unlawfully and cruelly pursued welfare recipients.

Think about that. The government paid nearly half a million dollars for a report on how to fix a catastrophic automation failure, and in return, they received another automation failure.

The report, intended to guide national policy and restore trust, was itself built on a foundation of digital lies.

“The worry,” Rudge warned, “is that the secretary or the minister who would be guided by the report doesn’t detect the errors and kind of takes it at face value.”

“Clearly Unacceptable”

When Rudge’s findings went public, the government was furious.

“It is a clearly unacceptable act from a consulting firm,” one top official stated in a Senate hearing. “We should not be receiving work that has glaring errors in footnotes… My people should not be double-checking a third-party provider’s footnotes.”

What was perhaps most telling? “I am struck by the lack of any apology to us,” the official added.

Deloitte was ultimately forced to correct the report and issue a partial refund of $97,000. For many Australians, this raised the question: Why not the full $440,000 for a report that was fundamentally untrustworthy?

The scandal is a flashing red light for all of us. We’re now living in the “Age of AI Slop,” where anyone can generate official-looking documents, legal arguments, or academic papers that are riddled with sophisticated nonsense.

It’s a scary new world. But thankfully, we still have people like Chris Rudge, humans with a forensic eye, a nagging suspicion, and the courage to call out a fake when they see one.