- 13 September 2022

Should we be afraid of Deepfakes?

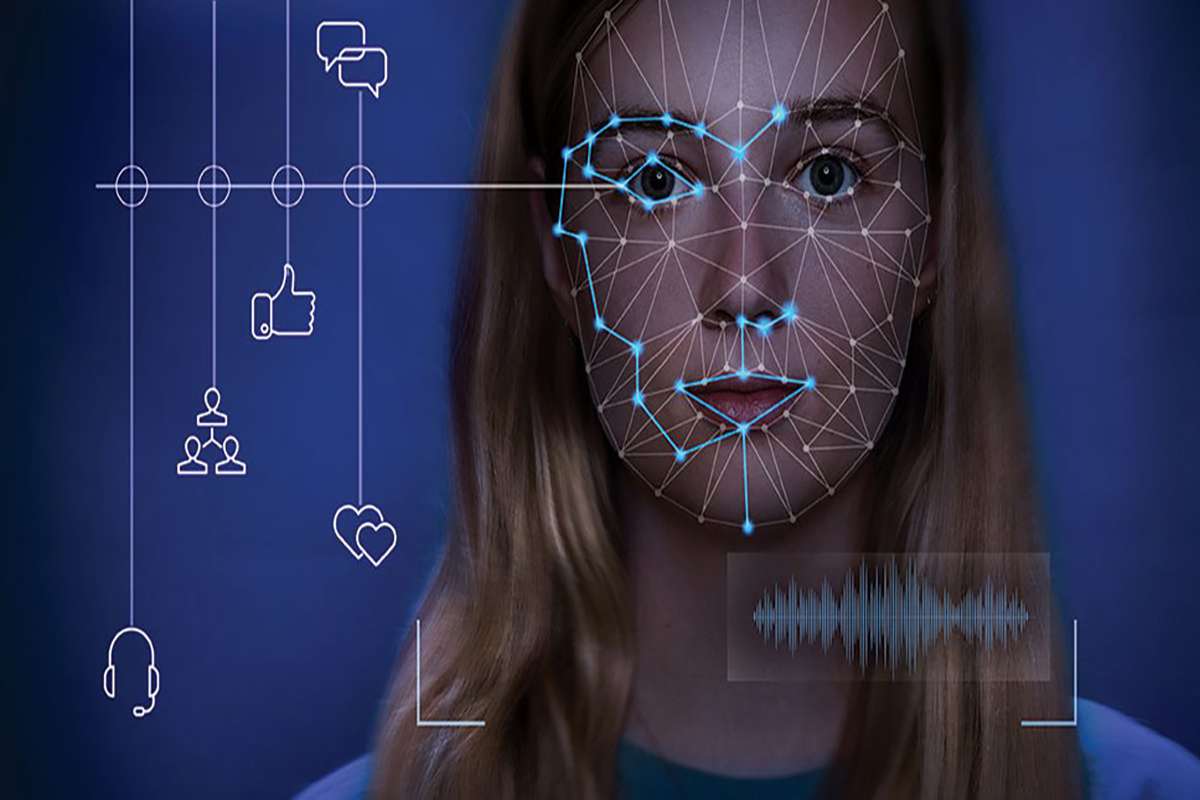

Technology is doing wonders in many fields of life, but every coin has two sides, and technology has also produced some inventions that leave us worried and afraid. Here we look at one such technology, one that may well freak you out and leave you feeling uncertain. Should you be afraid that one day your face could be used in misleading content or an explicit video without your consent? That is exactly what a new technology known as deepfake can do. So what is deepfake technology? How does it work? Why is it a cause for concern? And what safety measures can be taken? Read on to find out.

What is Deepfake?

Deepfake, a blend of the terms “deep learning” and “fake,” first appeared on the internet in 2017. Many deepfakes are powered by generative adversarial networks (GANs), a cutting-edge deep learning technique. Anyone with a computer and an internet connection can use deepfake technology to make convincingly realistic images and videos of people saying and doing things they never actually said or did. Several deepfake videos have gone viral, giving millions of people worldwide their first taste of this new technology. A few examples are President Obama appearing to refer to President Trump with an expletive, Mark Zuckerberg appearing to admit that Facebook’s real purpose is to control and exploit its users, and Bill Hader assuming the persona of Al Pacino on a late-night talk show.

How does it work?

Deepfakes rely on generative adversarial networks (GANs), in which two neural networks compete with each other: a generator creates fake images, and a discriminator tries to tell those fakes apart from real images. Each round of this contest pushes the generator to produce more convincing results. Although the technique may sound complex, deepfakes are easy to make, and they can be created quickly with a variety of online tools, including Faceswap and ZAO Deepswap. Deepfake technology makes it easy to alter entire videos using consumer-grade hardware. It uses modern artificial intelligence (AI) to automate tedious tasks such as detecting a person’s face in every frame of a video and replacing it with another face, which makes producing such altered videos very cheap.
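
To make the “adversarial” part more concrete, here is a minimal Python sketch of how the two networks in a GAN are scored. It is an illustration under stated assumptions, not how Faceswap or any other tool is actually built: the function names `discriminator_loss` and `generator_loss` are hypothetical, and the probabilities are made-up numbers standing in for the outputs of a real discriminator network. The discriminator is rewarded for rating real images close to 1 and fakes close to 0; the generator is rewarded when its fakes fool the discriminator.

```python
import math

# Hypothetical helper names; the probabilities used below are invented for illustration.
# Each probability is the discriminator's estimate that an image is real (0 < p < 1).

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    It wants real images scored near 1 and generated images scored near 0.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: it wants its fakes scored near 1."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # A discriminator that cannot tell real from fake answers 0.5 for everything.
    print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 4))  # 1.3863
    # A confident discriminator that catches the fakes: its loss is low...
    print(round(discriminator_loss([0.9], [0.1]), 4))  # 0.2107
    # ...while the generator that was caught gets a high loss.
    print(round(generator_loss([0.1]), 4))  # 2.3026
```

In a real GAN, both networks are trained in alternation to lower their own loss, so every improvement in the generator forces the discriminator to get better, and vice versa. That tug-of-war is what gradually pushes the generated faces toward realism.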

Like every technology, deepfakes have pros as well as cons. Assistive technology is already putting them to good use: for example, voice cloning can help people with Parkinson’s disease to converse. Deepfakes are also becoming a bridge between the living and the dead. For instance, CereProc, a speech synthesis company based in Edinburgh, Scotland, generated a synthetic voice for John F. Kennedy and used it to deliver the speech he was due to give in Dallas on the day he was assassinated. Similarly, many people use deepfake technology to see deceased family members or loved ones move or smile in a photo or video.

What is the cause of concern?

Deepfakes are a perfect tool for defaming and dishonoring anyone, because they produce believable fake news that takes time to debunk. They are also used to spread misleading information and content. The harm that deepfakes cause is often permanent and irreparable, especially when it damages people’s reputations. Meanwhile, the volume of deepfake content online is growing quickly. According to a report by the firm Deeptrace, there were 7,964 deepfake videos online at the start of 2019; nine months later, that number had grown to 14,678, an increase of roughly 84 percent. It has almost certainly grown since then. The widespread use of web services like Deep Nostalgia and face-swapping apps shows how quickly and widely the general public could embrace deepfakes. This is alarming: as deepfake videos become commonplace, it becomes very easy for anyone to defame or disgrace another person simply by making a deepfake video, with a long-lasting impact on that person’s life and career.

What safety measures could be taken?

Some people believe that AI will be the answer to harmful deepfake applications, since deepfakes are built on AI in the first place. For instance, experts have developed powerful deepfake detection systems that examine lighting, shadows, facial expressions, and other characteristics to identify fake photographs. Another creative defensive strategy is to add a filter to an image file that makes the image hard to use for creating a deepfake. A few startups, including Truepic and Deeptrace, now offer software to protect against deepfakes. Still, technological measures alone are unlikely to keep the spread of deepfakes under control in the long term.

So the question is: what legislative, political, and societal measures can we implement to protect ourselves from the risks posed by deepfakes? One appealingly simple option is to pass legislation that makes it unlawful to produce or distribute deepfake videos or photographs. Another effective solution is for tech companies like Facebook, Google, and Twitter to take stricter voluntary measures to stop the spread of harmful deepfakes. But no single answer will solve the problem on its own. Simply raising public awareness of the risks and potential consequences of deepfakes is a crucial first step. Remember: the best protection against pervasive misinformation is a well-informed public.

Written by:

Rohma Arshad